The AI that’s going to replace you is copying your work

Three years ago, I gave a talk entitled “Will AI kill human creativity?” in Bend, Oregon. Not one person in the 600-seat auditorium could have dreamt that the technology to make this a reality would arrive within the decade, let alone within five years.

The advent of generative AI tools like DALL-E, Stable Diffusion, and Midjourney has taken the internet by storm as the AI era dawns: the fifth industrial revolution is upon us.

When most people talk about the risks of AI, they generally mean labor concerns, the existential threat of AGI, or something else (seemingly) far removed from today’s reality.

But I’d contend these views overlook a glaring, near-term risk that is popping up across popular AI applications: IP theft.

Although we don’t seem to have reached the point (yet) where AI could entirely replace human creators (most of today’s libraries still revolve around human-generated prompts), we have passed the point where AI can infringe upon creators’ rights:

Example 1. GitHub Copilot

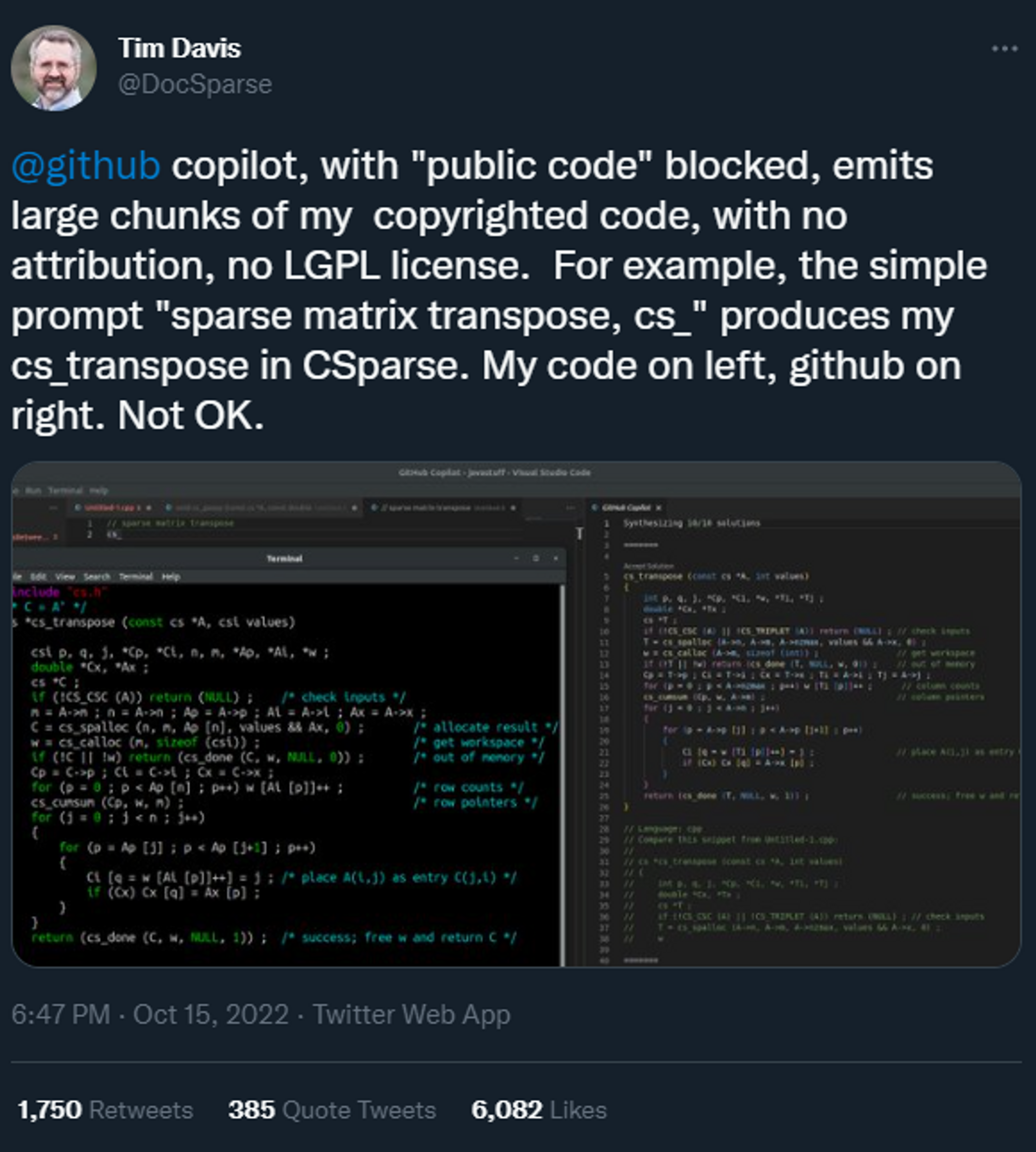

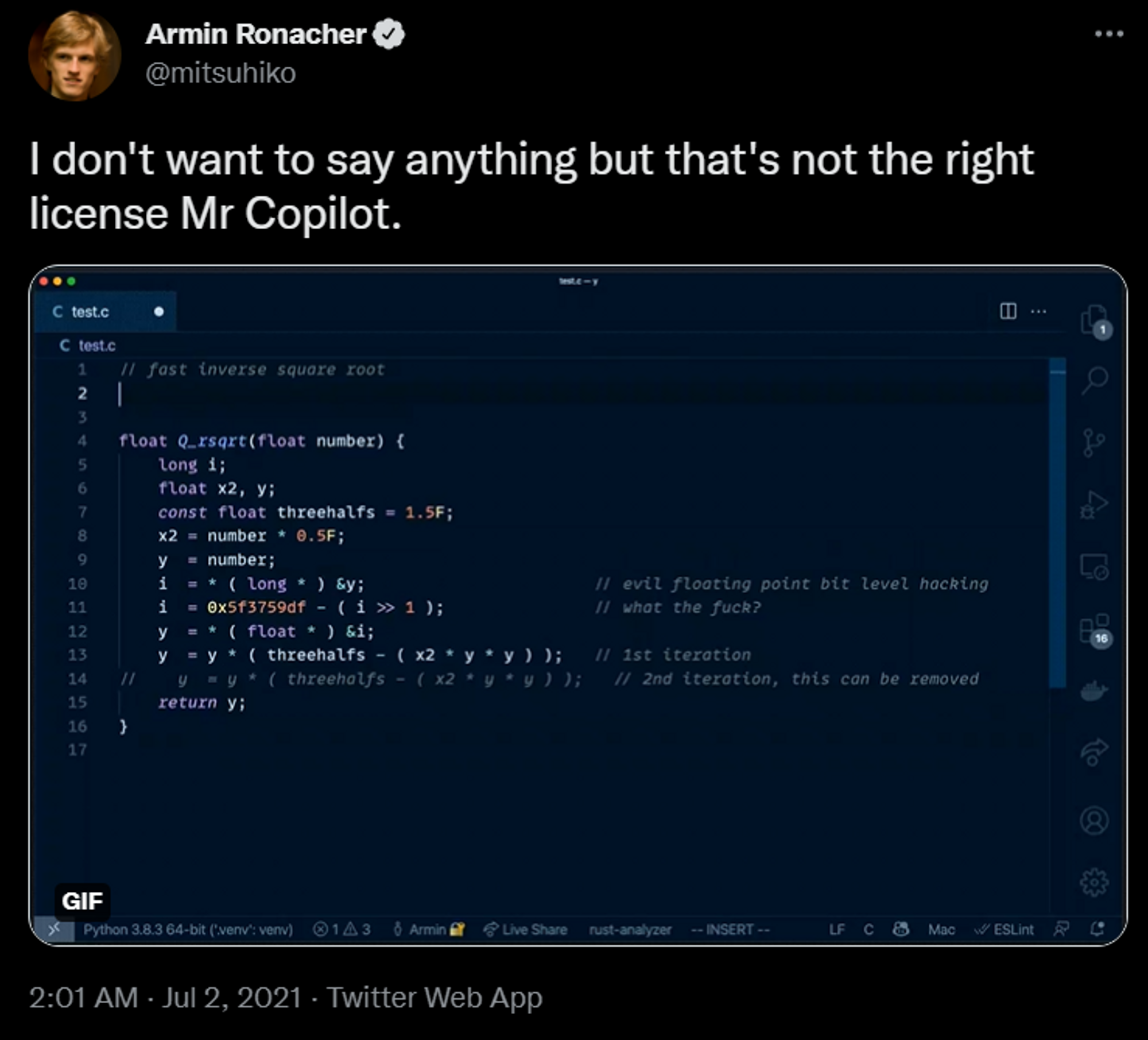

GitHub Copilot is a paid AI service that acts as a “pair programmer” offering autocomplete-style suggestions as you code. Copilot was reportedly trained on public source code, but it has been caught generating copyrighted code in several instances:

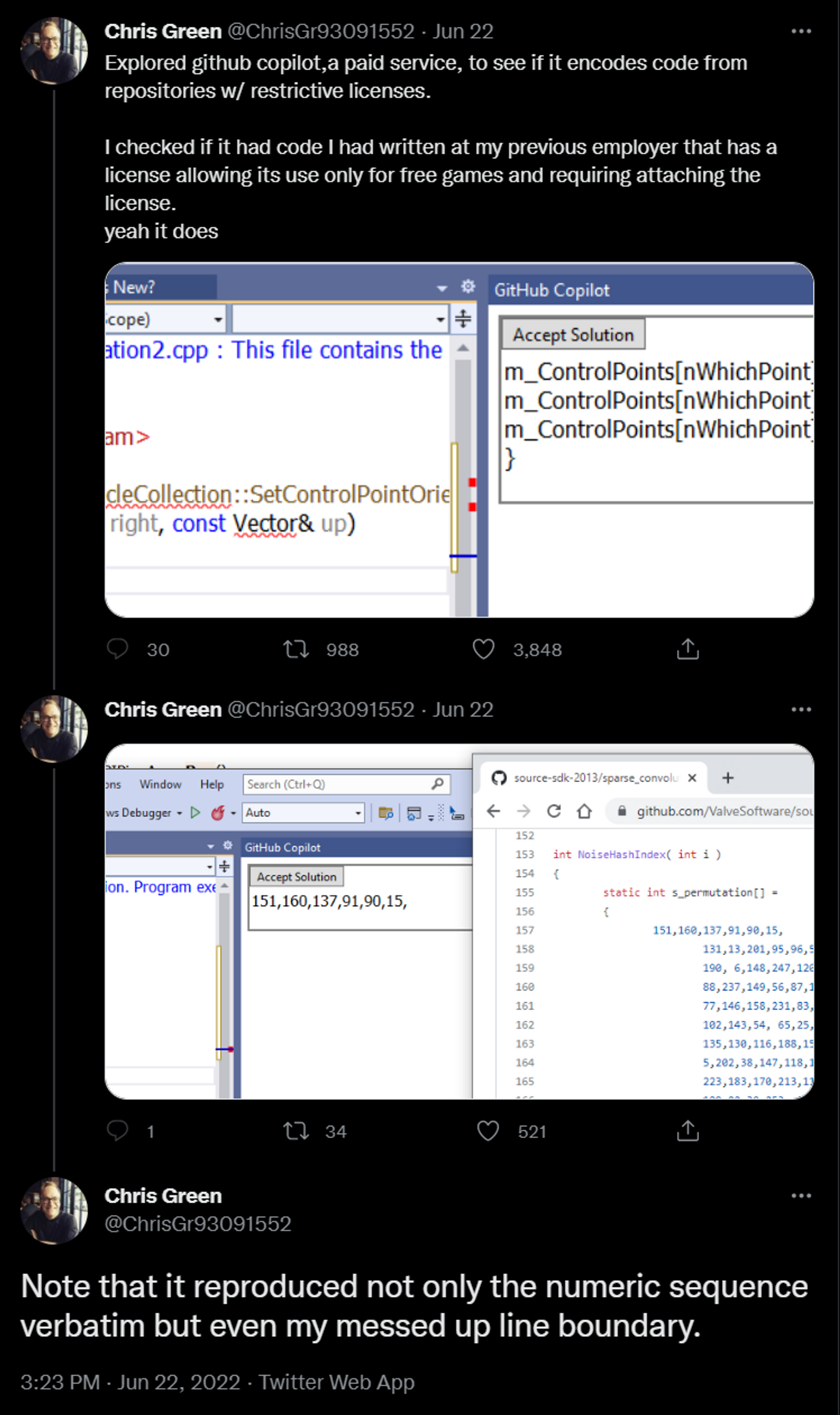

Multiple developers (examples below) have discovered that Copilot reproduces proprietary code with restricted licenses from private repositories, even copying minutiae like code comments, variable names, and odd line boundaries.

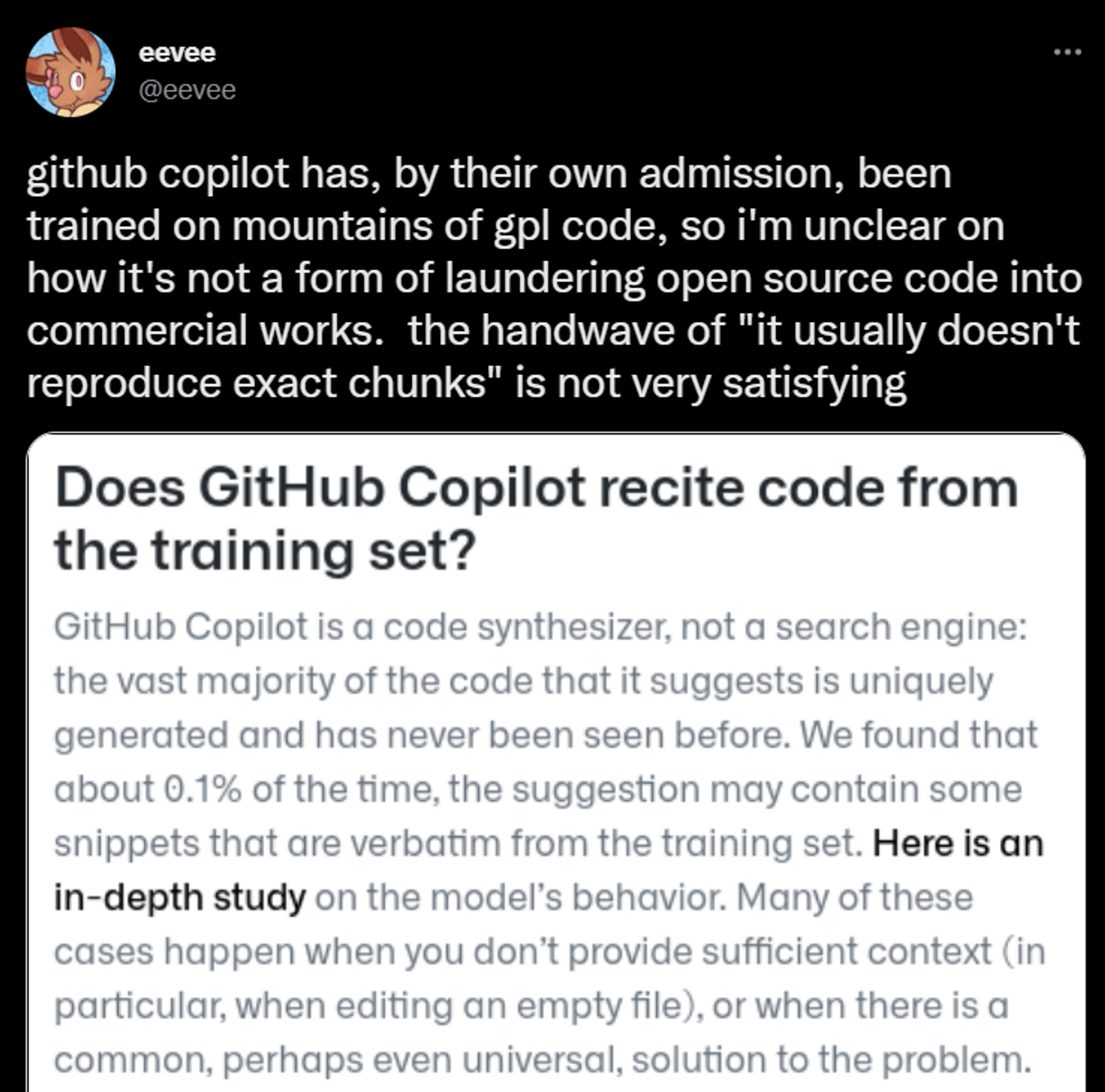

GitHub claims that Copilot suggestions only contain verbatim snippets 0.1% of the time, but the examples are undeniable: Copilot directly reproduces unlicensed commercial code.

However, most would contend this isn’t illegal (yet). The precedent was set long ago in Authors Guild v. Google, which held that a machine reading your code, book, or movie transcript isn’t copyright infringement unless the result is a published, non-trivial reproduction of someone else’s work. Some may argue that Copilot copying a few lines of code is trivial, but that will be up to the courts to decide:

Matthew Butterick (lawyer, designer, and developer) recently announced that he is working with the Joseph Saveri Law Firm to investigate the possibility of filing a copyright claim against GitHub. Several open-source groups and developers have disavowed Copilot, and larger corporations may soon follow suit, given the potential legal risks involved.

Example 2. Stable Diffusion & Greg Rutkowski

Greg Rutkowski is an artist with a distinctive style, known for illustrating fantasy scenes. Stable Diffusion is an AI text-to-image tool, similar to DALL-E and Midjourney.

Rutkowski’s name has reportedly been used to generate around 93,000 images using Stable Diffusion. Now anyone can create original artwork in his distinctive style in just minutes (or even seconds) by simply typing in a few words as directions:

This isn’t outright IP theft, but it’s obvious why text-to-image AI tools have provoked the ire of artists around the world. Once again, today’s US copyright law only protects artists against the reproduction of their actual artworks, not from someone mimicking their style. For individual artists like Rutkowski, there seems to be little potential for recourse — “A.I. should exclude living artists from its database,” he says.

While OpenAI prohibits the use of images of celebrities or politicians, there are not yet rules in place to prevent specific artists’ names from being used, let alone to inform artists that their work has been used in training data.

Example 3. Stock Photography & Stable Diffusion

StockAI is an AI-powered stock photo provider, built on Stable Diffusion and launched by Danny Postma in September 2022.

Soon after the launch, remote developer Montes caught one of the photos (now removed from the site, pictured below) which included a warped artifact of a watermark for Dreamstime, a popular stock photo website. One comment suggested it appeared as though the AI simply “warp[ed] a copyrighted photo to somehow make it new.” Stable Diffusion’s creators did not comment.

More recently, Matt Visiwig generated similar results while using Stable Diffusion directly with the prompt “vector art drawing of a monkey playing the drums in black and white”, which twice generated images with iStock watermarks:

Many assume that AI copyright infringement will go unprosecuted since it primarily targets individual creators, but examples like the above suggest that established companies (such as Dreamstime and iStock) also have a stake in this brewing legal storm.

Is today’s AI just Rube Goldberg IP theft?

This isn’t to say that I’m “black-pilled” on AI applications as a whole. There are very exciting applications of today’s AI tools that promise to broaden the horizons of human creativity, such as outpainting, inpainting, creating 3D videos from 2D photos, and more.

However, like every other groundbreaking technology, creative AI introduces ethical gray areas that should be assessed more carefully, such as where to set limits that prevent copyright infringement and IP theft.
